How to Deploy ComfyUI on Modal with an API


Let’s explore how to deploy ComfyUI on Modal, along with the pitfalls involved. Modal is an infrastructure platform for deploying custom AI models at scale, similar to platforms like fal.ai and Replicate. ComfyUI, on the other hand, is a node-based interface for Stable Diffusion and other generative models. You use it to compose generative workflows, which you can then deploy to the cloud.

We will use the Modal Comfy Worker community repository by Tolga.

Components of Modal Comfy Worker

Modal Comfy Worker consists of three files: snapshot.json, prompt.json, and workflow.py. prompt.json contains the ComfyUI workflow in API format. snapshot.json mirrors the snapshots produced by ComfyUI Manager and lists every custom node the workflow uses. workflow.py is the entrypoint for Modal: Modal runs workflow.py to build and start the containers involved. We will cover how to configure each of the three files.

Modifying prompt.json

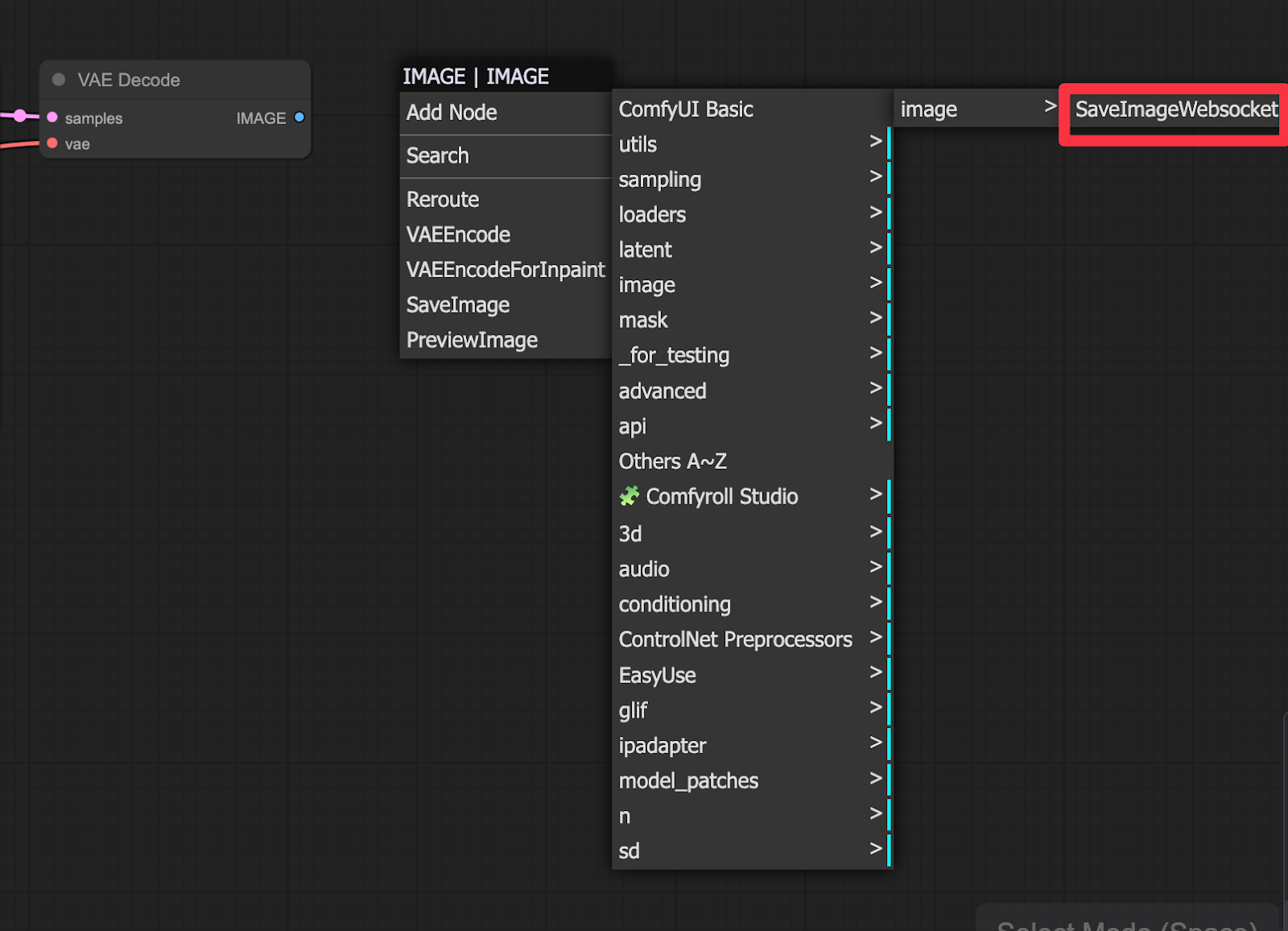

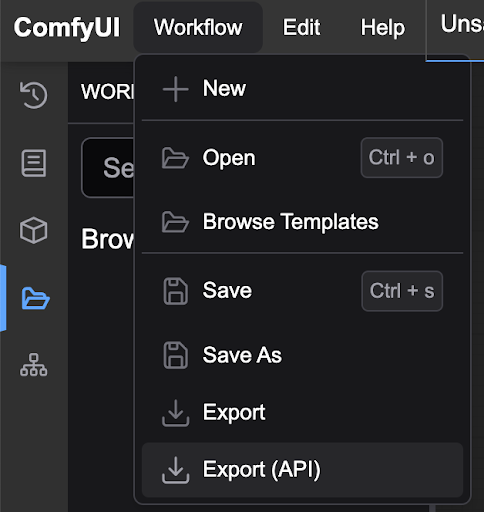

Export the workflow in ComfyUI’s API format using the Desktop application or a similar client. End the workflow with a SaveImageWebSocket node. This tells workflow.py to return the image in the API response as a base64 string instead of saving it to the file system. The screenshots above show how to add the SaveImageWebSocket node.
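If you would rather patch an exported prompt.json than edit the graph in the UI, the following is a minimal sketch of the same change. It assumes the API-format layout, where each node is stored as `{node_id: {"class_type": ..., "inputs": {...}}}` and a link is stored as `[source_node_id, output_index]`. The `images` input name and the default class name are assumptions. The class name is taken from this article, and some installs register the node as `SaveImageWebsocket`, so match whatever your own export shows.

```python
def add_websocket_output(prompt: dict, source_node_id: str, output_index: int = 0,
                         class_type: str = "SaveImageWebSocket") -> str:
    """Append an image-output node wired to an existing node's IMAGE output.

    `prompt` is an API-format export: {node_id: {"class_type": ..., "inputs": {...}}}.
    Returns the id assigned to the new node.
    """
    if source_node_id not in prompt:
        raise KeyError(f"node {source_node_id!r} is not in the prompt")
    # Node ids in API exports are usually numeric strings; take the next free integer.
    numeric_ids = [int(k) for k in prompt if k.isdigit()]
    new_id = str(max(numeric_ids, default=0) + 1)
    prompt[new_id] = {
        "class_type": class_type,
        # A link between nodes is encoded as [source_node_id, output_index].
        "inputs": {"images": [source_node_id, output_index]},
    }
    return new_id
```

For example, `add_websocket_output(prompt, "8")` would connect the new node to output 0 of a hypothetical node "8", typically the VAE decode step that produces the final image.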

Check that all custom nodes are installed, then export the workflow from the UI.

Check the JSON to ensure all custom nodes are exported.
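On large graphs, checking the JSON by eye gets tedious. Below is a minimal sketch of an automated check. It assumes the API-format layout, where each node has `class_type` and `inputs`. The `expected` set is something you supply yourself: list the custom node class names you know the workflow uses. The sketch also flags inputs left empty on export.

```python
import json
from collections import Counter


def node_types(prompt: dict) -> Counter:
    """Count how often each class_type appears in an API-format prompt."""
    return Counter(node["class_type"] for node in prompt.values())


def missing_node_types(prompt: dict, expected: set) -> set:
    """Return the expected class_types that the export does not contain."""
    return set(expected) - set(node_types(prompt))


def empty_inputs(prompt: dict) -> list:
    """List (node_id, input_name) pairs whose value is an empty string or None."""
    return [
        (node_id, name)
        for node_id, node in prompt.items()
        for name, value in node.get("inputs", {}).items()
        if value is None or value == ""
    ]


def check_prompt_file(path: str, expected: set) -> None:
    """Print a short report for a prompt.json file on disk."""
    with open(path, encoding="utf-8") as f:
        prompt = json.load(f)
    print("node types:", dict(node_types(prompt)))
    print("missing:", sorted(missing_node_types(prompt, expected)))
    print("empty inputs:", empty_inputs(prompt))
```

A non-empty "missing" list usually means a custom node was not installed in the UI when you exported.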

Customizing snapshot.json

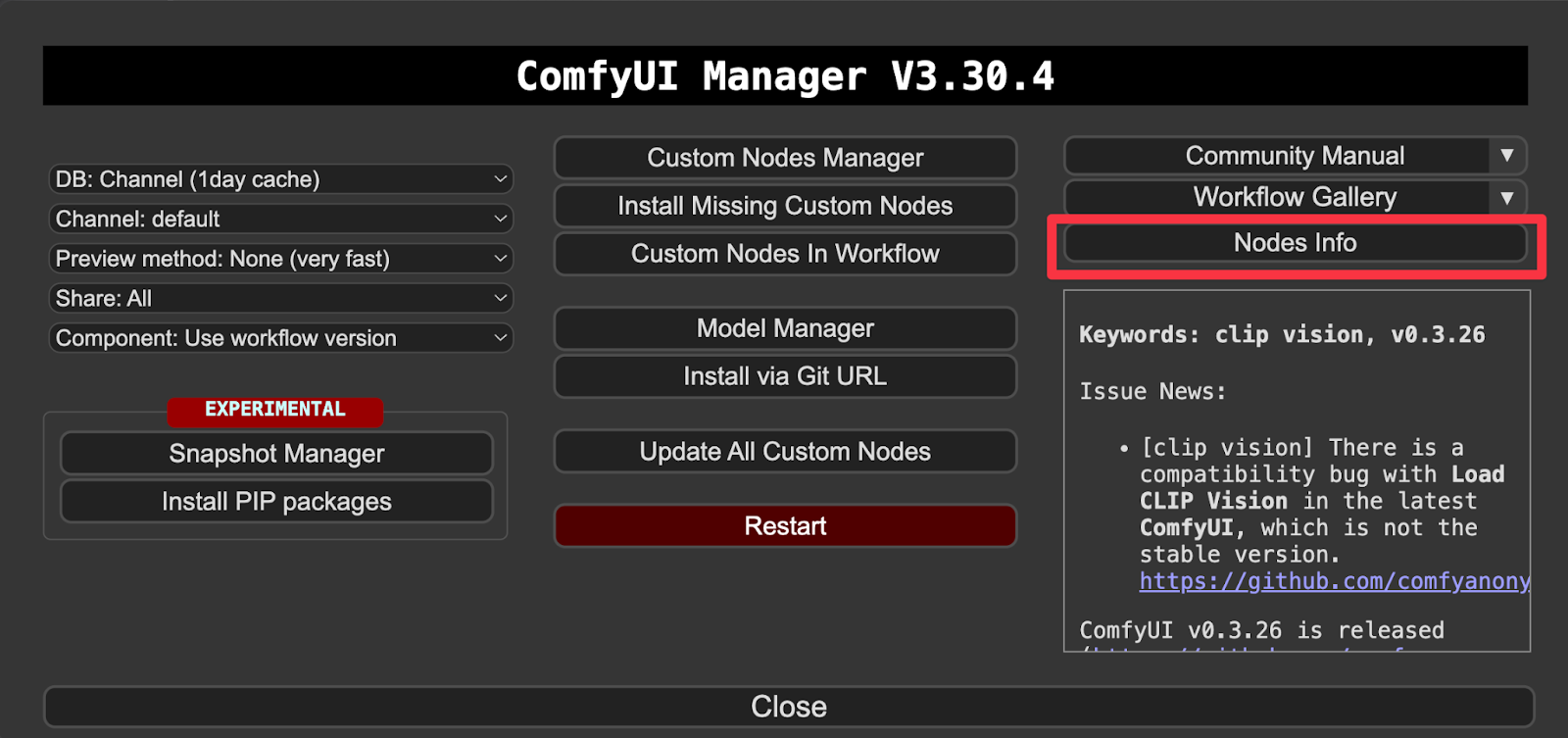

Use ComfyUI Manager’s Node Info option to find the GitHub repository for each custom node you need to list in snapshot.json.

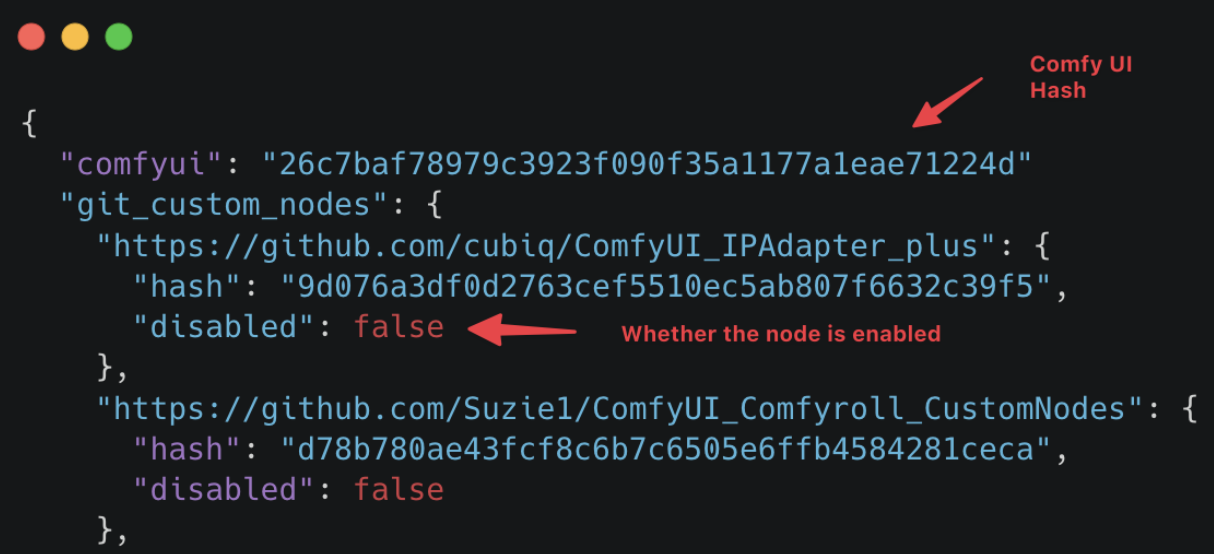

Here’s an example of snapshot.json with a breakdown of what each field does:

Ensure that the GitHub commit hash of each node you use has a matching GitHub release. The file_custom_nodes field lets you add a node manually without referencing a git repository; this is rarely needed. The pips field, on the other hand, is used by ComfyUI Manager to install the correct package versions for all the custom nodes. For example, if every node needed huggingface_hub 0.26.0, you would pin that version in pips.
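To make the fields concrete, here is a minimal sketch that builds a snapshot.json in Python. The layout is an assumption modeled on ComfyUI Manager snapshot files as I understand them: top-level `comfyui`, `git_custom_nodes`, `file_custom_nodes`, and `pips` keys. Compare it against a snapshot exported from your own ComfyUI Manager before relying on it. The hash check only confirms that each value is a full 40-character commit SHA. It does not confirm that a matching GitHub release exists.

```python
import json
import re
from typing import Dict, List, Optional

_SHA_RE = re.compile(r"[0-9a-f]{40}")


def is_full_sha(value: str) -> bool:
    """True if value looks like a full 40-character git commit hash."""
    return bool(_SHA_RE.fullmatch(value))


def make_snapshot(comfyui_commit: str,
                  git_nodes: Dict[str, str],
                  pips: List[str],
                  file_nodes: Optional[List[str]] = None) -> dict:
    """Build a snapshot dict; git_nodes maps repository URL -> commit hash."""
    for ref in [comfyui_commit, *git_nodes.values()]:
        if not is_full_sha(ref):
            raise ValueError(f"not a full commit hash: {ref!r}")
    return {
        "comfyui": comfyui_commit,
        "git_custom_nodes": {
            url: {"hash": sha, "disabled": False} for url, sha in git_nodes.items()
        },
        # Rarely needed: nodes added as loose files rather than git repositories.
        "file_custom_nodes": [
            {"filename": name, "disabled": False} for name in (file_nodes or [])
        ],
        # Pinned pip requirements, e.g. "huggingface_hub==0.25.0".
        "pips": {spec: "" for spec in pips},
    }


def write_snapshot(snapshot: dict, path: str = "snapshot.json") -> None:
    """Write the snapshot as indented JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(snapshot, f, indent=2)
```

Putting the version pin in pips, rather than in each node's own requirements, keeps the pinned version in one place.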

Disabling the UI

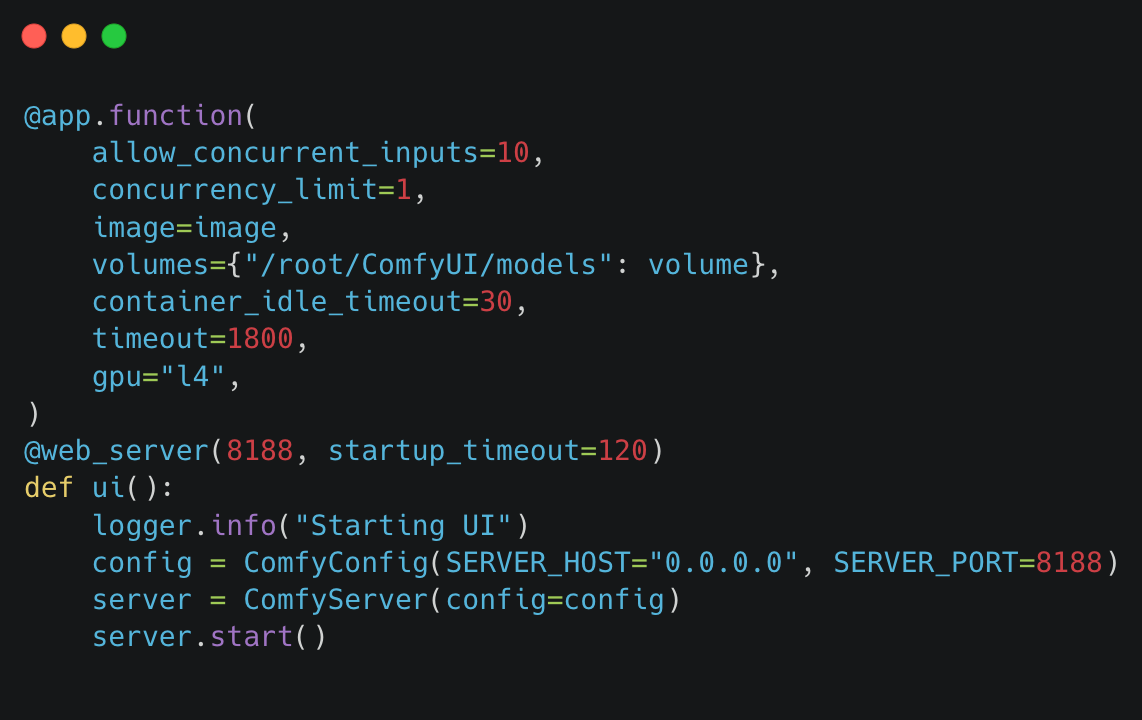

The UI is managed in workflow.py. Comment out the section shown above to disable the UI.

Troubleshooting

Issues faced

When troubleshooting the workflow, I faced the following issues:

- Weights and paths were exported with Windows backslashes instead of forward slashes.

  a. controlnets\controlnets was exported rather than controlnets/controlnets with the Linux separator.

- Custom nodes were not installed in the UI and consequently were not exported in prompt.json.

- Not all prompt.json fields are populated after export. For instance, you need to fill in the LoraLoaderFromURL fields yourself.

- The ComfyUI-GlifNode custom node was using an outdated Hugging Face dependency.

  a. A breaking change in huggingface_hub v0.26.0 removed cached_download, so I needed to downgrade to v0.25.0.

- Installing the ComfyUI IP Adapter node carried an implicit requirement to download weights and place them in specific directories. It was not possible to download them directly via models_to_download in workflow.py. The node expects the weights to be named CLIP-ViT-H-14-laion2B-s32B-b79K.safetensors, while the default name on Hugging Face was models.safetensors. I could not find a way to rename the file on download, so I downloaded and renamed the weights myself before running `uv run modal volume put <volume> <weights>` where needed.

- Images used in the workflow had to be added manually.

  a. Modify lib/image.py to include the local file:

  ```diff
  +    .add_local_file(local_image_path, "/root/ComfyUI/input/lady.png", copy=True)
  ```

  b. Then modify workflow.py to accept the image:

  ```diff
   image = get_comfy_image(
       local_snapshot_path=local_snapshot_path,
       local_prompt_path=local_prompt_path,
  +    local_image_path=local_image_path,
  ```
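Two of the fixes above can be scripted locally before deploying. Below is a minimal sketch with two hypothetical helpers. `normalize_model_paths` rewrites backslashes to forward slashes in string inputs of an API-format prompt. It uses a heuristic that skips any string containing whitespace, so prompt text is left alone. Adjust the heuristic if your paths contain spaces. `stage_renamed_weights` copies downloaded weights under the file name a custom node expects.

```python
import shutil
from pathlib import Path


def normalize_model_paths(prompt: dict) -> int:
    """Rewrite Windows separators to forward slashes in path-like string inputs.

    Heuristic (assumption): a string with a backslash and no whitespace is a path.
    Returns the number of values changed.
    """
    changed = 0
    for node in prompt.values():
        inputs = node.get("inputs", {})
        for name, value in inputs.items():
            if (isinstance(value, str) and "\\" in value
                    and not any(ch.isspace() for ch in value)):
                inputs[name] = value.replace("\\", "/")
                changed += 1
    return changed


def stage_renamed_weights(downloaded: Path, staging_dir: Path, expected_name: str) -> Path:
    """Copy downloaded weights under the file name a custom node expects."""
    staging_dir.mkdir(parents=True, exist_ok=True)
    target = staging_dir / expected_name
    shutil.copyfile(downloaded, target)
    return target
```

After staging, the renamed file can be uploaded with `uv run modal volume put <volume> <weights>`, as in the IP Adapter step above.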

Deployment

Configure a GitHub secret so that ComfyUI can pull the custom nodes from GitHub as needed:

```shell
uv run modal secret create github-secret GITHUB_TOKEN=<your_github_token>
```

Run the Modal CLI through uv so that the Python library dependencies are installed. Modal executes the entrypoint script, workflow.py, during the build process, so the libraries it uses must be installed. Then deploy:

```shell
uv run modal deploy workflow
```

Testing the flow

Test the flow by making a request to the /infer_sync endpoint:

```shell
curl -X POST https://myapp--comfy-worker-asgi-app.modal.run/infer_sync \
  -H "Content-Type: application/json" \
  -d '{"prompt": "A dog sitting on a chair in the middle of a fire with a positive expression saying everything is fine"}'
```

You should receive a base64-encoded image in the output_image field of the response.
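The same request can be made from Python with only the standard library. Below is a minimal sketch with two hypothetical helpers. `infer_sync` sends the same JSON body as the curl command. `save_output_image` decodes the output_image field and writes it to disk. The 600-second timeout is an arbitrary allowance for cold starts. Stripping a `data:` prefix is a defensive assumption, not documented behaviour of the worker.

```python
import base64
import json
import urllib.request


def infer_sync(endpoint: str, prompt: str, timeout: float = 600.0) -> dict:
    """POST {"prompt": ...} to the /infer_sync endpoint and return the parsed JSON."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def save_output_image(result: dict, path: str) -> int:
    """Decode the base64 output_image field, write it to path, return the byte count."""
    encoded = result["output_image"]
    # Tolerate a data-URL prefix in case one is present (assumption).
    if encoded.startswith("data:") and "," in encoded:
        encoded = encoded.split(",", 1)[1]
    raw = base64.b64decode(encoded)
    with open(path, "wb") as f:
        f.write(raw)
    return len(raw)
```

For example, calling `save_output_image(infer_sync("https://myapp--comfy-worker-asgi-app.modal.run/infer_sync", "A dog sitting on a chair"), "output.png")` would save the result. The .png extension assumes the workflow emits PNG.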

We thank Tolga for creating the repository and Brushless.ai for sponsoring this work.